Google is gearing up to change the AI game in a big way, and it could spell trouble for many AI companies.

The tech giant’s latest move? Integrating its Gemini Nano AI model directly into Chrome. This isn’t just another browser update; it is a strategic play that could redefine how AI is accessed and used on the web.

Built-in AI for Chrome

When developing AI-powered features for the web, we often turn to server-side solutions, especially for handling larger models. This is particularly true for generative AI, where even the smallest models can be roughly a thousand times larger than the average web page. The challenge isn’t limited to generative AI—many AI models, ranging from tens to hundreds of megabytes, pose similar issues.

Since these models aren’t shared across websites, each site needs to download them individually when the page loads. This approach is inefficient and burdensome for both developers and users.

Google recognizes this challenge and is actively developing solutions to address it. As they state:

“While server-side AI is a great option for large models, on-device and hybrid approaches have their own compelling upsides. To make these approaches viable, we need to address model size and model delivery.”

Its answer is to build the models into the browser itself:

“That’s why we’re developing web platform APIs and browser features designed to integrate AI models, including large language models (LLMs), directly into the browser. This includes Gemini Nano, the most efficient version of the Gemini family of LLMs, designed to run locally on most modern desktop and laptop computers. With built-in AI, your website or web application can perform AI-powered tasks without needing to deploy or manage its own AI models.”

This strategic move by Google highlights its commitment to enhancing web-based AI capabilities by reducing reliance on server-side solutions and enabling powerful on-device processing.

The Reality of Gemini Nano in Chrome

Google has introduced Gemini Nano to bring AI directly to users’ devices through Chrome. The promise is that it will enable faster, more private AI-powered features without relying on massive server-side models. However, the execution leaves much to be desired.

Gemini Nano is designed to run on local devices, but its current capabilities are limited. While it’s meant to offer the convenience of on-device processing, the model’s efficiency and effectiveness have yet to prove themselves in real-world applications. This isn’t the seamless, groundbreaking technology some might expect—at least not yet.

Benefits of built-in AI

Compared to implementing on-device AI yourself, built-in AI provides several key advantages:

- Simplified deployment: The browser handles model distribution, taking into account the device’s capabilities and managing updates automatically. This frees you from the burden of downloading or updating large models over the network. You also avoid dealing with issues like storage management, memory constraints, and serving costs.

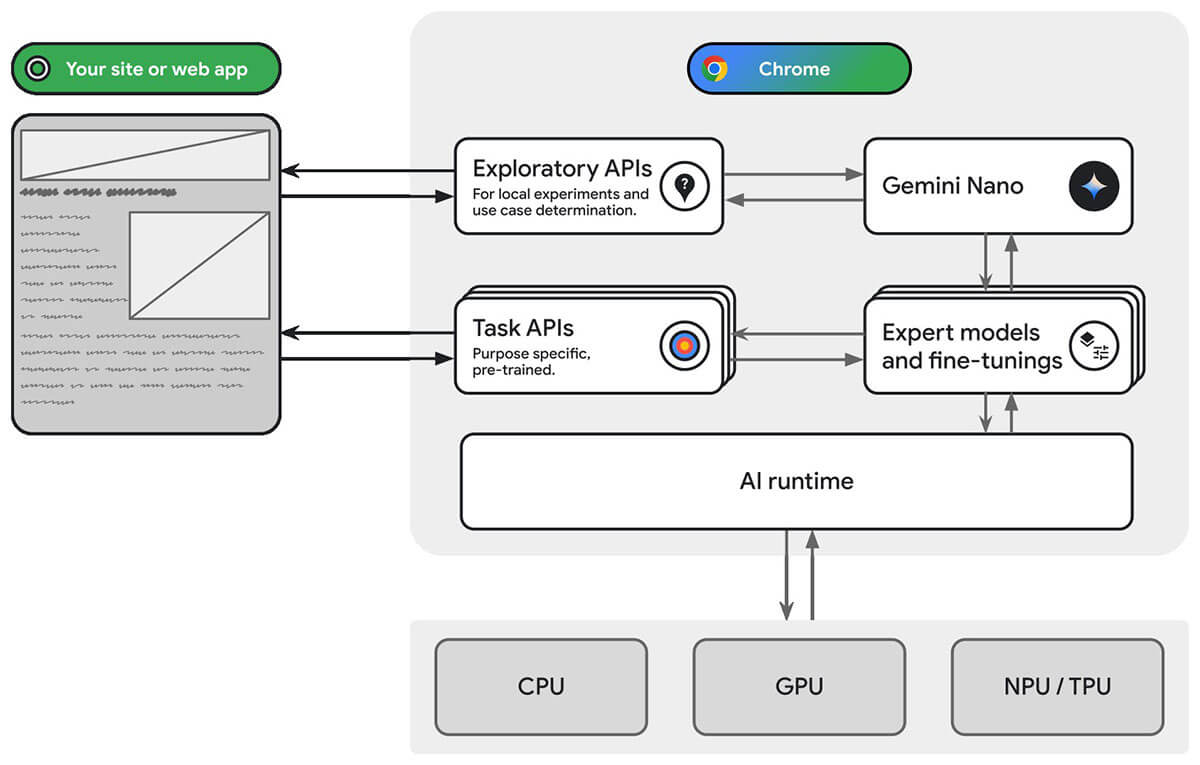

- Optimized hardware utilization: The browser’s AI runtime is designed to leverage the full potential of available hardware—whether it’s a GPU, NPU, or even the CPU. This ensures your app achieves the best possible performance on any device.

This diagram demonstrates how a website can use task and exploratory web platform APIs to access models built into Chrome.

Early Days

Chrome has started rolling out the built-in Gemini Nano, and I’ve had the opportunity to test it through the early access program. Here’s how a web developer can start using Gemini Nano with Chrome’s developer tools console—no API keys, no third-party vendors needed.
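As a rough sketch of what that can look like, the snippet below checks whether a built-in model is ready and, if so, sends it a single prompt. It is written under assumptions: the `LanguageModel` global with `availability()`, `create()`, `prompt()`, and `destroy()` is modelled on Chrome's Prompt API as documented at the time of writing, and earlier preview builds exposed a different surface under `window.ai`, so the names may not match your Chrome version. The interfaces and the `askBuiltInModel` helper are hypothetical names introduced here for illustration.

```typescript
// Minimal shapes for the parts of the built-in AI surface this sketch touches.
// ASSUMPTION: modelled on Chrome's Prompt API; older previews used window.ai,
// and the surface may change again.
type Availability = "unavailable" | "downloadable" | "downloading" | "available";

interface PromptSession {
  prompt(input: string): Promise<string>;
  destroy(): void;
}

interface LanguageModelLike {
  availability(): Promise<Availability>;
  create(): Promise<PromptSession>;
}

interface BuiltInAiHost {
  LanguageModel?: LanguageModelLike;
}

// Hypothetical helper: ask the built-in model one question.
// Returns null when no ready model is exposed, so the caller can
// fall back to something else (for example a server-side model).
async function askBuiltInModel(
  question: string,
  host: BuiltInAiHost = globalThis as unknown as BuiltInAiHost,
): Promise<string | null> {
  const api = host.LanguageModel;
  if (!api) return null; // API not exposed in this browser or context

  const status = await api.availability();
  if (status !== "available") return null; // model missing or still downloading

  const session = await api.create();
  try {
    return await session.prompt(question);
  } finally {
    session.destroy(); // release the on-device session
  }
}
```

The DevTools console runs JavaScript rather than TypeScript, so strip the type annotations before pasting, then try something like `await askBuiltInModel("Summarize what a service worker does in one sentence.")`. Returning `null` instead of throwing keeps the fallback decision with the caller, which suits the hybrid approach Google describes.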

What happens if we can start using AI directly in our browser? Is Google on the verge of catching up in the AI race? Only time will tell.